See decay by probing your accounts

2019-10-10

aka use the cdk to build an account probe rig

When you create a new account, it is pristine and useless. Then you start to dirty it up with all kinds of goodies that do stuff and, every once in a while, you have to check up on it to make sure it still resembles something wabi-sabi and not some nasty old handbag of tissues, loose change, lipstick and used chewing gum.

For us, Cloud Custodian goes a long way, but we needed to have some weird shit checked that doesn’t quite fit its profile.

So in our never ending story of creating a “safe” space for the kids to play with their cloudy lego blocks, we have found multiple ways to make accounts say “ahh” and have a look around and optionally fix things.

- Our gran-pappy hacking tool is ansible, and with it we came up with this… Multi Account Fondling

- We also recently discovered that the totally excellent Cloud Custodian can do the same with c7n-org

I like just had to give the new cloud vaping kid on the block, the CDK, a try since I’ve jinja2’ed enough for 2 lifetimes.

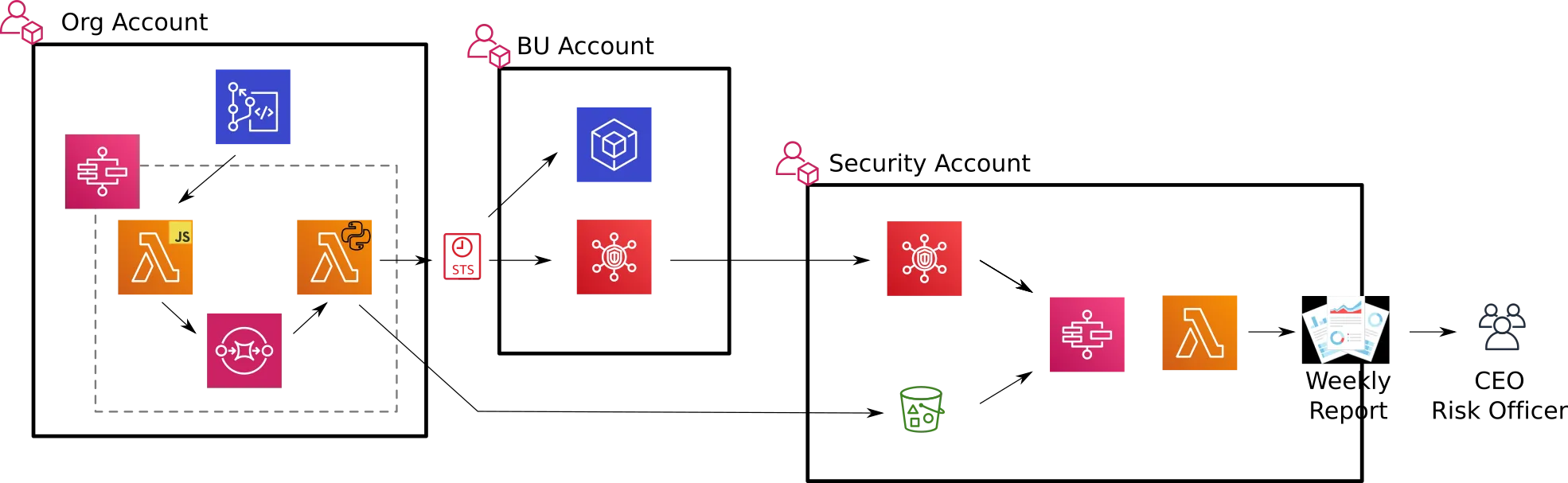

The design is basically:

- hack some js and py lambdas

- slap it together with step functions

- shove accounts (from codecommit) into an sqs queue

- dump results in s3 in our security account

- and send some findings into the account’s securityhub (propagated to the central securityhub)


and, whenever the need to spam the “people who care” gets too much:

- hack another step function with a lambda

- grab the findings from securityhub and s3

- generate a markdown doc and htmlify it

- spam spam spam wonderful spam!

This post describes the first CDK stack that happens in the org account.

Seedy Kay

Being a bit “keep that .ts away from me!”, I started with a `cdk init -l javascript`.

Then I slapped some mini projects into the handlers folder:

- jobControl - a node project for the codecommit and sqs bits

- prober - a python project for peeking into the accounts, with n probe modules that actually do the work

```
▸ bin/
▸ docs/
▾ handlers/
  ▾ jobControl/
    ▸ node_modules/
      jobControl.js
      package-lock.json
      package.json
  ▾ prober/
    ▸ lib/
    ▾ probe/
        __init__.py
        budgets.py
        iam.py
      poster.py
      prober.py
      requirements.txt
▸ lib/
▸ node_modules/
  buildspec.yml
  cdk.json
  package-lock.json
  package.json
  README.md
  tsconfig.json
```

It might seem a little weird to go duolingo in the same project: we are moving towards js, but wanted to build the actual probing in python for simplicity's sake.

The CDK makes it super simple to have both and CodeBuild makes sure the CDK has something to deploy.

Anyhoos - the lib of the stack looks like this…

```javascript
const cdk = require('@aws-cdk/core')
const lambda = require('@aws-cdk/aws-lambda')
const sqs = require('@aws-cdk/aws-sqs')
const sfn = require('@aws-cdk/aws-stepfunctions')
const tasks = require('@aws-cdk/aws-stepfunctions-tasks')
const iam = require('@aws-cdk/aws-iam')
const events = require('@aws-cdk/aws-events')
const targets = require('@aws-cdk/aws-events-targets')

class ProberStack extends cdk.Stack {
  constructor(scope, id, props) {
    super(scope, id, props);

    const probeBucketName = 'fooeep239012382193012'
    const lebuck = { bucketName: probeBucketName } //new s3.Bucket(this, 'ResultBucket');

    const leq = new sqs.Queue(this, 'proberQ', {
      fifo: true,
      contentBasedDeduplication: true,
    })

    const startBatchFunction = new lambda.Function(this, 'startBatchFunction', {
      runtime: lambda.Runtime.NODEJS_10_X,
      handler: 'jobControl.startBatch',
      code: lambda.Code.asset('./handlers/jobControl'),
      timeout: cdk.Duration.seconds(15),
      environment: {
        QUEUE_URL: leq.queueUrl,
      },
    })

    const checkBatchFunction = new lambda.Function(this, 'checkBatchFunction', {
      runtime: lambda.Runtime.NODEJS_10_X,
      handler: 'jobControl.checkBatch',
      code: lambda.Code.asset('./handlers/jobControl'),
      timeout: cdk.Duration.seconds(30),
      environment: {
        QUEUE_URL: leq.queueUrl,
      },
    })

    const probeFunction = new lambda.Function(this, 'probeFunction', {
      runtime: lambda.Runtime.PYTHON_3_7,
      handler: 'prober.handler',
      code: lambda.Code.asset('./handlers/prober'),
      timeout: cdk.Duration.seconds(10),
      environment: {
        QUEUE_URL: leq.queueUrl,
        S3_BUCKET: lebuck.bucketName
      },
      memorySize: 1024,
    })

    const polStatement = new iam.PolicyStatement({
      resources: ['*'],
      actions: [
        "codecommit:Get*",
        "codecommit:List*",
      ],
    })
    startBatchFunction.addToRolePolicy(polStatement)

    const polStatement1 = new iam.PolicyStatement({
      resources: ['*'],
      actions: [
        "sts:*"
      ],
    })
    probeFunction.addToRolePolicy(polStatement1)

    leq.grantPurge(startBatchFunction);
    leq.grantSendMessages(startBatchFunction);
    leq.grantConsumeMessages(checkBatchFunction);
    leq.grantConsumeMessages(probeFunction);

    const polStatement2 = new iam.PolicyStatement({
      resources: [
        'arn:aws:s3:::'+probeBucketName,
        'arn:aws:s3:::'+probeBucketName+'/*',
      ],
      actions: [
        "s3:PutObject*"
      ],
    })
    probeFunction.addToRolePolicy(polStatement2)

    const startJob = new sfn.Task(this, 'startBatch', {
      task: new tasks.InvokeFunction(startBatchFunction),
      resultPath: '$.guid',
    });

    const checkJob = new sfn.Task(this, 'checkBatch', {
      task: new tasks.InvokeFunction(checkBatchFunction),
    });

    const numTasks = 5;
    let probeTasks = []
    for (let i=0; i<numTasks; i++) {
      probeTasks.push(new sfn.Task(this, 'probe_'+i, {
        task: new tasks.InvokeFunction(probeFunction),
      }))
    }

    const parallel = new sfn.Parallel(this, 'paraProbes')
    const loop = new sfn.Pass(this,'loop!')
    const done = new sfn.Pass(this,'done!')

    probeTasks.forEach(x => {
      parallel.branch(x)
    })

    const sfndef = startJob
      .next(loop)
      .next(parallel)
      .next(checkJob)
      .next( new sfn.Choice(this, 'Job Complete?')
        .when( sfn.Condition.stringEquals('$.Attributes.ApproximateNumberOfMessages', '0'), done)
        .otherwise(loop))

    const leSfn = new sfn.StateMachine(this, 'ProberStateMachine', {
      definition: sfndef,
      timeout: cdk.Duration.seconds(300),
    })

    const leRule = new events.Rule(this, 'ProberScheduledRule', {
      schedule: events.Schedule.expression('cron(0 16 ? * * *)')
    });

    leRule.addTarget(new targets.SfnStateMachine(leSfn, {
      input: events.RuleTargetInput.fromObject({accMask: 'foo_'})
    }));
  }
}

module.exports = { ProberStack }
```

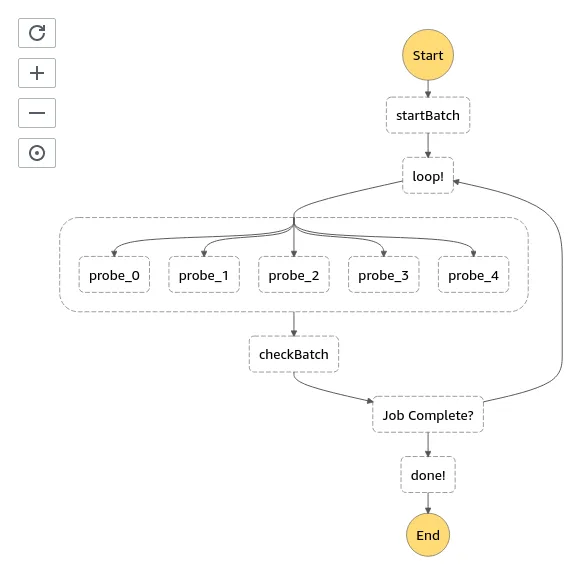

This builds a pretty little sfn number that looks like this…


Time is of the essence they say, so this stack runs 5 probers in parallel (1 account per prober).

The last bit kicks it off once a day via a cloudwatch event rule.
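The loop that the state machine runs can be sketched in a few lines of plain Python. This is only a simulation of the control flow under the assumption that each parallel branch takes at most one message per round; `run_batch` and the in-memory queue are stand-ins made up for illustration, not part of the stack.

```python
from collections import deque


def run_batch(accounts, parallelism=5):
    """Simulate startBatch -> (paraProbes -> checkBatch -> 'Job Complete?') loop."""
    queue = deque(accounts)  # startBatch: one message per account
    rounds = 0
    processed = []
    while True:
        rounds += 1
        # paraProbes: every branch tries to take one message off the queue
        for _ in range(parallelism):
            if queue:
                processed.append(queue.popleft())
        # checkBatch: SQS returns queue attributes as strings,
        # which is why the Choice state compares against '0' with stringEquals
        depth = str(len(queue))
        if depth == '0':
            return rounds, processed


rounds, processed = run_batch([f"foo_{i}" for i in range(12)])
print(rounds, len(processed))
```

With 12 accounts and 5 branches the loop goes round three times. Note that the probes always run at least once, even when the queue starts out empty.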

lookie le lambdas

startBatch and checkBatch

both of them are contained in the same js file…

```javascript
const aws = require('aws-sdk');
const codecommit = new aws.CodeCommit();
const sqs = new aws.SQS();
const process = require('process');
const yaml = require('yaml')

exports.startBatch = async (event) => {
  console.log('starting....',event)
  let accre = event.accMask ? event.accMask : 'foo_'
  let pa = []
  let params = {
    filePath: 'group_vars/all/accounts.yml', /* required */
    repositoryName: 'fooeep', /* required */
  };
  const contents = await codecommit.getFile(params).promise();
  const accounts = yaml.parse(String(contents.fileContent))
  Object.keys(accounts.aws_accounts).filter( i => new RegExp(accre).test(i)).forEach(accName => {
    console.log(accName)
    let accNum = accounts.aws_accounts[accName]
    let params = {
      MessageBody: JSON.stringify({
        name: accName,
        number: String(accNum)
      }),
      QueueUrl: process.env.QUEUE_URL,
      MessageGroupId: 'proberGroup'
    }
    pa.push( sqs.sendMessage(params).promise()
      .then((response) => {
        console.log(accNum,response)
        return response;
      })
    )
  })
  return Promise.all(pa)
}

exports.checkBatch = (event) => {
  console.log('checking',event)
  let params = {
    QueueUrl: process.env.QUEUE_URL,
    AttributeNames: [ 'ApproximateNumberOfMessages' ],
  }
  return sqs.getQueueAttributes(params).promise()
    .then((response) => {
      console.log(response)
      return response;
    })
}
```

The startBatch handler goes and grabs our latest list of accounts from codecommit in a yaml file that looks like this

```yaml
---
aws_accounts:
  foo_whan: "111111111111"
  foo_too: "222222222222"
  foo_tea: "333333333333"
```

and dumps each { name: accName, number: String(accNum) } json onto the sqs queue.
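For the python-minded, the filter-and-enqueue step boils down to something like the sketch below. It assumes the yaml has already been parsed into a dict; `build_messages` is a made-up name, and the real handler does this in js and sends each body to sqs rather than returning a list.

```python
import json
import re


def build_messages(aws_accounts, acc_mask='foo_'):
    """Return one JSON message body per account whose name matches the mask."""
    pattern = re.compile(acc_mask)
    return [
        json.dumps({'name': name, 'number': str(number)})
        for name, number in aws_accounts.items()
        # re.search is unanchored, like RegExp.test in the js handler
        if pattern.search(name)
    ]


accounts = {
    'foo_whan': '111111111111',
    'foo_too': '222222222222',
    'bar_won': '444444444444',  # hypothetical account that the mask skips
}
messages = build_messages(accounts)
print(messages)
```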

The checkBatch handler literally just fetches the queue depth and returns it so sfn can figure out if it needs to go loopy again.

prober and the probes

The python lambda that slithers into all the accounts and tickles some APIs also has some multi-threaded goodness (since we can’t multiprocess in lambda :[).

This allows the probes themselves to run at the same time, kicking relevant api tyres and taking numbers for securityhub findings.

The prober itself then has a cup of tea while the probes do their thing and, when they are all back, dumps their responses to a bucket in our security account and stashes any findings in the account’s securityhub (which then make their way to the security account too).

```python
import sys
sys.path.insert(0,'lib')
import yaml
import boto3
import os
import json
import time
import datetime
import dateutil
import uuid
import threading
import poster
import probe.budgets
import probe.iam

sqs = boto3.client('sqs')
s3 = boto3.client('s3')
iam = boto3.client('iam')

results = {}

class ProbeThread(threading.Thread):
    def __init__(self, account, creds, func):
        threading.Thread.__init__(self)
        self.account = account
        self.creds = creds
        self.func = func
        self.name = func.__module__

    def run(self):
        print (self.name, "working")
        session = boto3.session.Session( aws_access_key_id=self.creds['AccessKeyId'],
                                         aws_secret_access_key=self.creds['SecretAccessKey'],
                                         aws_session_token=self.creds['SessionToken'])
        results[self.name] = self.func(self.account, session)

def dateConverter(o):
    if isinstance(o, datetime.datetime):
        return o.__str__()

def handler(event, context):
    result = 'nan'
    response = sqs.receive_message( QueueUrl=os.environ['QUEUE_URL'],
                                    MaxNumberOfMessages=1, WaitTimeSeconds=5)
    print ('receive', response)
    if 'Messages' in response:
        print (response['Messages'][0]['Body'])
        account = json.loads(response['Messages'][0]['Body'])
        sts_client = boto3.client('sts')
        sts_response = sts_client.assume_role(RoleArn='arn:aws:iam::'+account['number']+':role/OrganizationAccountAccessRole',RoleSessionName='prober_session')
        creds = sts_response["Credentials"]
        probeThreads = []
        probeThreads.append(ProbeThread(account, creds, probe.budgets.getInfo))
        probeThreads.append(ProbeThread(account, creds, probe.iam.getInfo))
        results['msg']=response
        results['account'] = account
        for probeThread in probeThreads:
            probeThread.start()
        for probeThread in probeThreads:
            probeThread.join()
        s3.put_object(
            ACL='bucket-owner-full-control',
            Bucket=os.environ['S3_BUCKET'],
            Body=json.dumps(results, default=dateConverter),
            Key=account['name']+'/'+time.strftime('%Y/%m/%d/%H/')+str(uuid.uuid4())+'.json')
        result = json.dumps(response['Messages'][0]['Body']) + ' processed'
        poster.post_finding(account, creds, results)
        response = sqs.delete_message( QueueUrl=os.environ['QUEUE_URL'], ReceiptHandle=response['Messages'][0]['ReceiptHandle'])
        print ('delete', response)
    else:
        print ('no message found')
    return result
```
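A possible variation on the fan-out-and-wait bit, not what the prober does today, is to let `concurrent.futures` handle the threads and hand the results back instead of writing into a module-level dict (which a warm lambda container can carry over between invocations). The sketch below makes that swap; `run_probes` and the fake probes are invented for illustration, and `session` is whatever the real probes expect.

```python
from concurrent.futures import ThreadPoolExecutor


def run_probes(account, session, probes):
    """Run every probe concurrently and return {probe_name: result}."""
    results = {}
    with ThreadPoolExecutor(max_workers=len(probes) or 1) as pool:
        futures = {name: pool.submit(func, account, session)
                   for name, func in probes.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()  # blocks until that probe is done
            except Exception as err:
                # one failing probe should not hide the results of the others
                results[name] = {'error': str(err), 'findings': []}
    return results


# Stand-in probes with the same (account, session) signature as getInfo
def fake_iam(account, session):
    return {'result': [], 'findings': []}


def fake_budgets(account, session):
    raise RuntimeError('budgets api said no')


out = run_probes({'name': 'foo_whan', 'number': '111111111111'}, None,
                 {'probe.iam': fake_iam, 'probe.budgets': fake_budgets})
print(out)
```

The trade-off is that a probe that blows up shows up as an `error` entry in the s3 dump rather than as a missing key.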

poster.post_finding is just a function that posts the findings into securityhub, like this…

```python
import sys
sys.path.insert(0,'lib')
import boto3
import time
import datetime
import dateutil.tz  # import the submodule explicitly, since tz.tzutc() is used below

def post_finding(account, creds, results):
    session = boto3.session.Session( aws_access_key_id=creds['AccessKeyId'],
                                     aws_secret_access_key=creds['SecretAccessKey'],
                                     aws_session_token=creds['SessionToken'])
    sechub = session.client('securityhub')
    now = datetime.datetime.utcnow().replace(tzinfo=dateutil.tz.tzutc()).isoformat()
    for key in results:
        if 'findings' in results[key]:
            for finding in results[key]['findings']:
                print ('found finding', finding)
                # Use the same Id for the lookup and the import; the original
                # looked up a shorter Id than it imported, so an existing
                # finding was never matched and CreatedAt was always reset.
                finding_id = 'probe-finding-'+key+'-'+account['number']+'-'+account['name']+'-'+finding['Id']
                prev_findings = sechub.get_findings(
                    Filters = {
                        'AwsAccountId': [{
                            'Value': account['number'],
                            'Comparison': 'EQUALS'
                        }],
                        'Id': [{
                            'Value': finding_id,
                            'Comparison': 'EQUALS'
                        }],
                        'GeneratorId': [{
                            'Value': key,
                            'Comparison': 'EQUALS'
                        }]
                    },
                    MaxResults=1)
                if len(prev_findings['Findings'])>0:
                    print ('existing finding', finding_id)
                    ctime = prev_findings['Findings'][0]['CreatedAt']
                else:
                    print ('new finding', finding_id)
                    ctime = now
                sechub.batch_import_findings(
                    Findings = [{
                        'SchemaVersion': '2018-10-08',
                        'Id': finding_id,
                        'ProductArn': 'arn:aws:securityhub:eu-west-1:'+account['number']+':product/'+account['number']+'/default',
                        'GeneratorId': key,
                        'AwsAccountId': account['number'],
                        'Types': ['Software and Configuration Checks/Prober '+finding['Pillar']+'/'+key],
                        'CreatedAt': ctime,
                        'UpdatedAt': now,
                        'Severity': {
                            'Normalized': finding['Severity']
                        },
                        'Title': finding['Title'],
                        'Resources': [{
                            'Type': 'Other',
                            'Id': finding['Resource']
                        }],
                        'Description': finding['Description'],
                    }])
                print ('posted finding', finding)
        else:
            print('no findings for ',key)
```

The first of the two probes hooked up is a “no iam users!” probe, which flags any user with console access

```python
import sys
sys.path.append('lib')

def getInfo(account, session):
    iam = session.client('iam')
    users = iam.list_users()
    findings = []
    for user in users['Users']:
        try:
            # raises NoSuchEntityException when the user has no console password
            profile = iam.get_login_profile(UserName=user['UserName'])
            findings.append( {
                'Id': user['UserName'],
                'Description': 'User has console access - '+user['UserName'],
                'Title': 'Access Control violation',
                'Severity': 75,
                'Resource': account['name'],
                'Pillar': 'Security'
            })
            print (profile)
        except iam.exceptions.NoSuchEntityException:
            print ('no login for', user['UserName'])
    return {
        'result': users,
        'findings': findings
    }
```

and the second is a “your budget is blown!” probe

```python
import sys
sys.path.append('lib')

def getInfo(account,session):
    budgets = session.client('budgets')
    blist = budgets.describe_budgets(AccountId=account['number'])
    findings = []
    for b in blist['Budgets']:
        # amounts come back as strings, so compare them as floats
        if float(b['BudgetLimit']['Amount']) < float(b['CalculatedSpend']['ActualSpend']['Amount']):
            findings.append({
                'Id': b['BudgetName'],
                'Description': 'Budget '+b['BudgetName']+' exceeded - {Budget:'+b['BudgetLimit']['Amount']+',ActualSpend:'+b['CalculatedSpend']['ActualSpend']['Amount']+'}',
                'Title': 'Cost Management - Budget Exceeded',
                'Severity': 75,
                'Resource': account['name'],
                'Pillar': 'Cost Optimization'
            })
    return {
        'result' : blist,
        'findings' : findings
    }
```

The findings basically contain

- Id - which together with the account makes a unique finding

- Description - bit of text

- Title - some more text

- Severity - 0-100 seriousness :D

- Resource - the account name, so we don’t have to guess

- Pillar - the Well Architected Framework pillar associated with it
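If more probes get bolted on, a tiny helper could keep the finding shape consistent. The sketch below is only a suggestion: `make_finding` and `finding_key` are hypothetical names, and the key format simply mirrors the `Id` string that `post_finding` passes to `batch_import_findings`.

```python
def make_finding(account, finding_id, title, description, severity, pillar):
    """Build a finding dict with the six fields the poster expects."""
    if not 0 <= severity <= 100:
        raise ValueError('Severity must be between 0 and 100')
    return {
        'Id': finding_id,
        'Description': description,
        'Title': title,
        'Severity': severity,
        'Resource': account['name'],
        'Pillar': pillar,
    }


def finding_key(generator, account, finding):
    """Id as sent to securityhub: unique per probe, account and finding."""
    return '-'.join(['probe-finding', generator,
                     account['number'], account['name'], finding['Id']])


account = {'name': 'foo_whan', 'number': '111111111111'}
finding = make_finding(account, 'bob', 'Access Control violation',
                       'User has console access - bob', 75, 'Security')
print(finding_key('probe.iam', account, finding))
```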

paging Doctor Deploy “Code Build, [floor], [room]”

The CodeBuild buildspec is a two-runtime, three-install affair…

```yaml
---
version: 0.2

phases:
  install:
    runtime-versions:
      python: 3.7
      nodejs: 10
    commands:
      - npm -g i aws-cdk
      - cd handlers/jobControl
      - npm ci
      - cd ../../handlers/prober
      - pip install -r requirements.txt -t lib
      - cd ../..
      - npm ci
  build:
    commands:
      - cdk deploy
```

Thoughts I Thunked

Whoa, that is a monster post, and it looks overwhelming - but it really ain't.

With our 65 account gig it runs in 2m30s, and as we add more complex probes that will start expanding.
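A back-of-the-envelope check on those numbers, assuming every trip round the loop takes about the same time (which it won't, exactly): the 65 accounts, 5 parallel probers, 150 seconds and the 300 second state machine timeout come from this post, the rest is arithmetic.

```python
import math

accounts = 65
parallel_probers = 5
observed_seconds = 150        # 2m30s
state_machine_timeout = 300   # timeout set on the state machine

rounds = math.ceil(accounts / parallel_probers)
seconds_per_round = observed_seconds / rounds

# integer arithmetic avoids float rounding surprises
max_rounds = state_machine_timeout * rounds // observed_seconds
max_accounts = max_rounds * parallel_probers

print(rounds, round(seconds_per_round, 1), max_rounds, max_accounts)
```

So at the current pace the timeout is hit at roughly double the number of accounts, and sooner once the probes get slower. Bumping `numTasks` or the timeout are the obvious knobs.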

So on cdk, sfn and sechub…

CDK

Pretty sleek (barring the close to daily updates :]) and provides the flexibility that we had in jinja2 and the composability of ansible roles, just in a more code-friendly way.

Definitely my new favourite cloudformation puffing tool.

The mega bonus for me is the magic iam dust it sprinkles in one line, like leq.grantSendMessages(startBatchFunction).

Step FN

So a more efficient option would have been to CodeBuild a js/py app, which would negate the need for a queue, but the CDK makes queues and sfn so damn easy, and sfn provides oodles of visibility and control.

I really like the idea of hooking lambdas up together to make something that can work around the limitations (not time, now that the max is 15 minutes!, but memory or vcpus).

Security hub

So far it seems a bit tweaked to ‘security vendors’ but, thanks to c7n showing us all how it’s done in their sechub resource, all in all it’s pretty easy to hook up and use its stash (surely dynamo at the back) and reporting.

The alternative would have been to point Athena’s Gluey HaDoopy Warriors @ s3 and See Quilled GROUP BY’s till the cows came home.

This rig greases the wheels for our reporting bit - maybe in a later post…